Dealing with Deepfakes

Why in News?

Recent instances of deepfake videos and images circulating on social media platforms have raised concerns about the spread of misinformation and confusion among the public. These deepfakes, which use artificial intelligence (AI) to manipulate or generate realistic media of real people or events, have been shared for malicious purposes, including spreading fake news and defaming individuals.

The rise of deepfakes severely threatens information credibility, individual privacy, and the legal and ethical frameworks governing digital media.

How are Deepfakes Created?

Deepfakes are created using deep learning techniques, a branch of AI that learns from large amounts of data to perform complex tasks. Standard methods for creating deepfakes include face-swapping, voice cloning, and text generation. Face-swapping replaces a person’s face in a video or image with another person’s.

Voice cloning replicates a person’s voice using another person’s voice or a synthetic one. Text generation produces coherent and plausible text based on a prompt or context.

How to Detect Deepfakes?

Detecting deepfakes is becoming increasingly challenging as the technology improves. However, deepfakes can still be spotted by looking for visual artifacts, audio anomalies, and semantic inconsistencies. Manual methods involve examining videos or images for glitches or distortions, audio for errors, and text for logical or factual mistakes.

Automated tools that use AI for deepfake detection are also being developed. These efforts are supported by social media platform rules, verification programs, research lab technologies, and competitions like the Deepfake Detection Challenge.

How to Deal with Deepfakes?

Dealing with deepfakes requires a multi-faceted approach encompassing legal, technical, and social measures. Legal measures involve:

- Enacting laws and regulations to prohibit creating and disseminating deepfakes for malicious purposes.

- Defining clear standards and penalties.

- Protecting victims.

Technical measures focus on developing effective tools and systems for detecting and removing deepfakes, enhancing media security and authenticity, and creating repositories of verified media. Social measures include:

- Raising awareness among the public.

- Promoting media literacy and critical thinking skills.

- Fostering trust and responsibility in digital media usage.

Way Forward

Deepfakes present both opportunities and challenges. While they have the potential to revolutionise various fields, they also carry risks to democracy, journalism, and justice. It is crucial to adopt a balanced and proactive approach, harnessing the benefits of deepfakes while minimising their harm. This requires collaboration among governments, media platforms, civil society organisations, and researchers, along with responsible use of AI and digital media by all stakeholders.

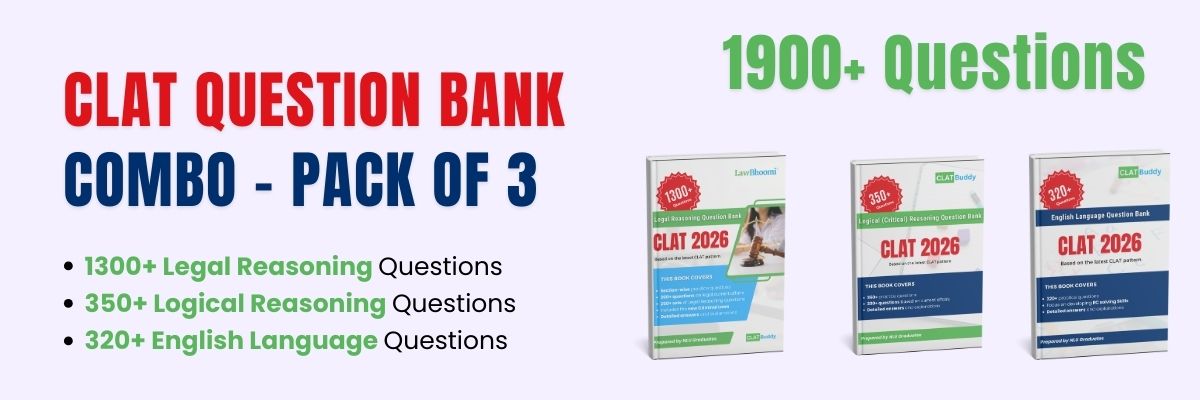
